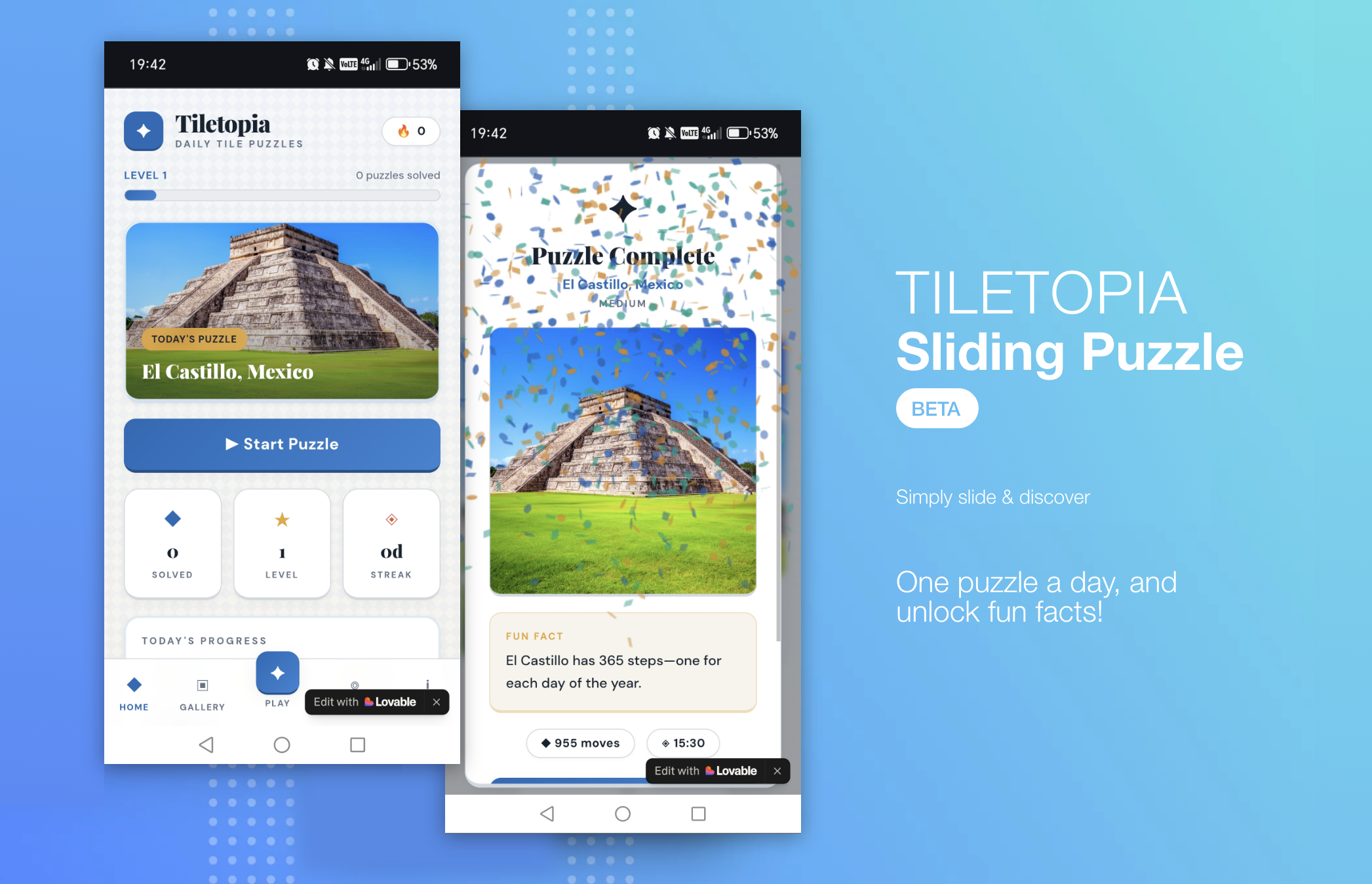

I created a trivial sliding puzzle game to stress-test AI as a design partner.

And I did it in 48 hours.

To be clear, my goal wasn’t to ship a full product; it was to explore how generative AI could augment design judgment, accelerate iteration, and influence product thinking, all within the constraints of a simple MVP. Tiltopia let me make, test, and learn in a short timeframe while experimenting with AI as a Design Co-Pilot.

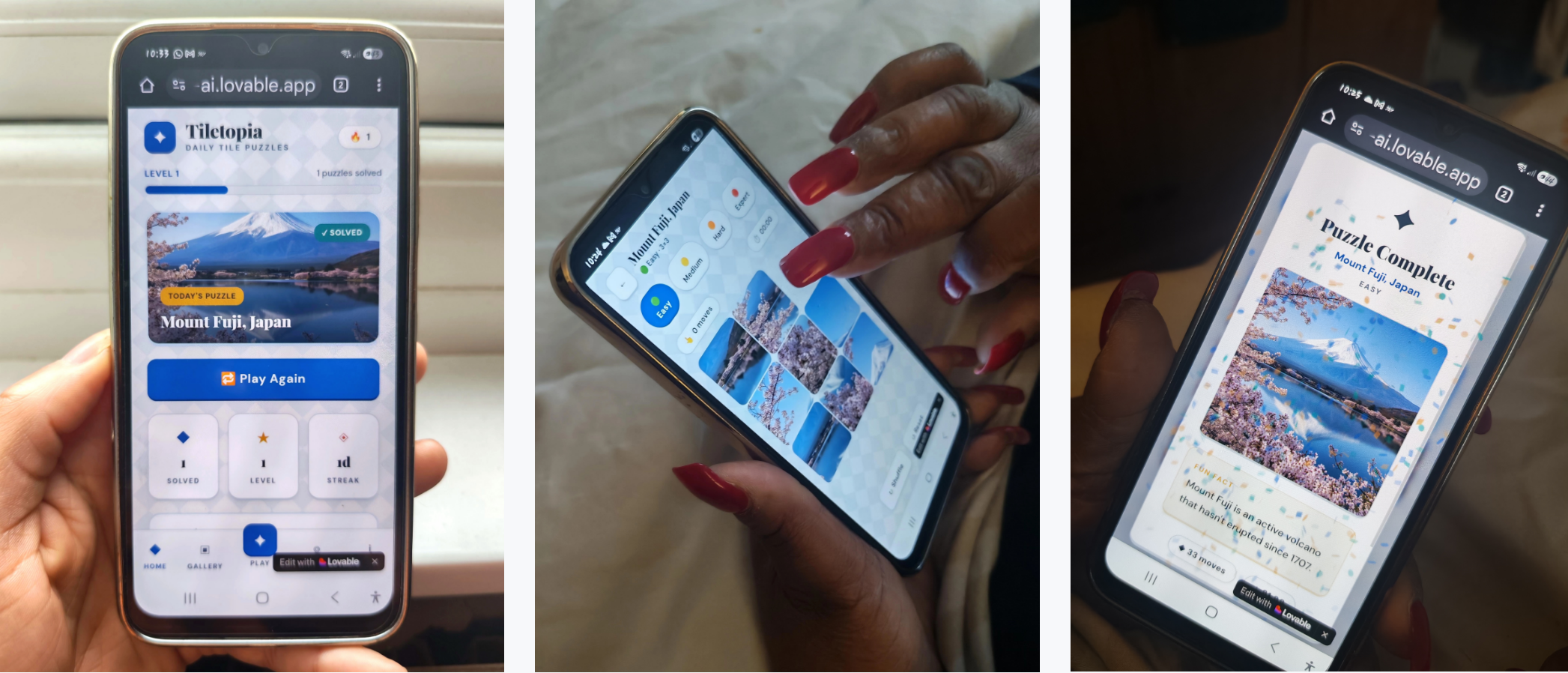

I didn’t measure success by how pretty the puzzle looked — I measured it by whether the daily loop was understandable, replayable, and felt rewarding to people I shared it with. This is the kind of low-risk sandbox I’d mandate for my team to skill up.

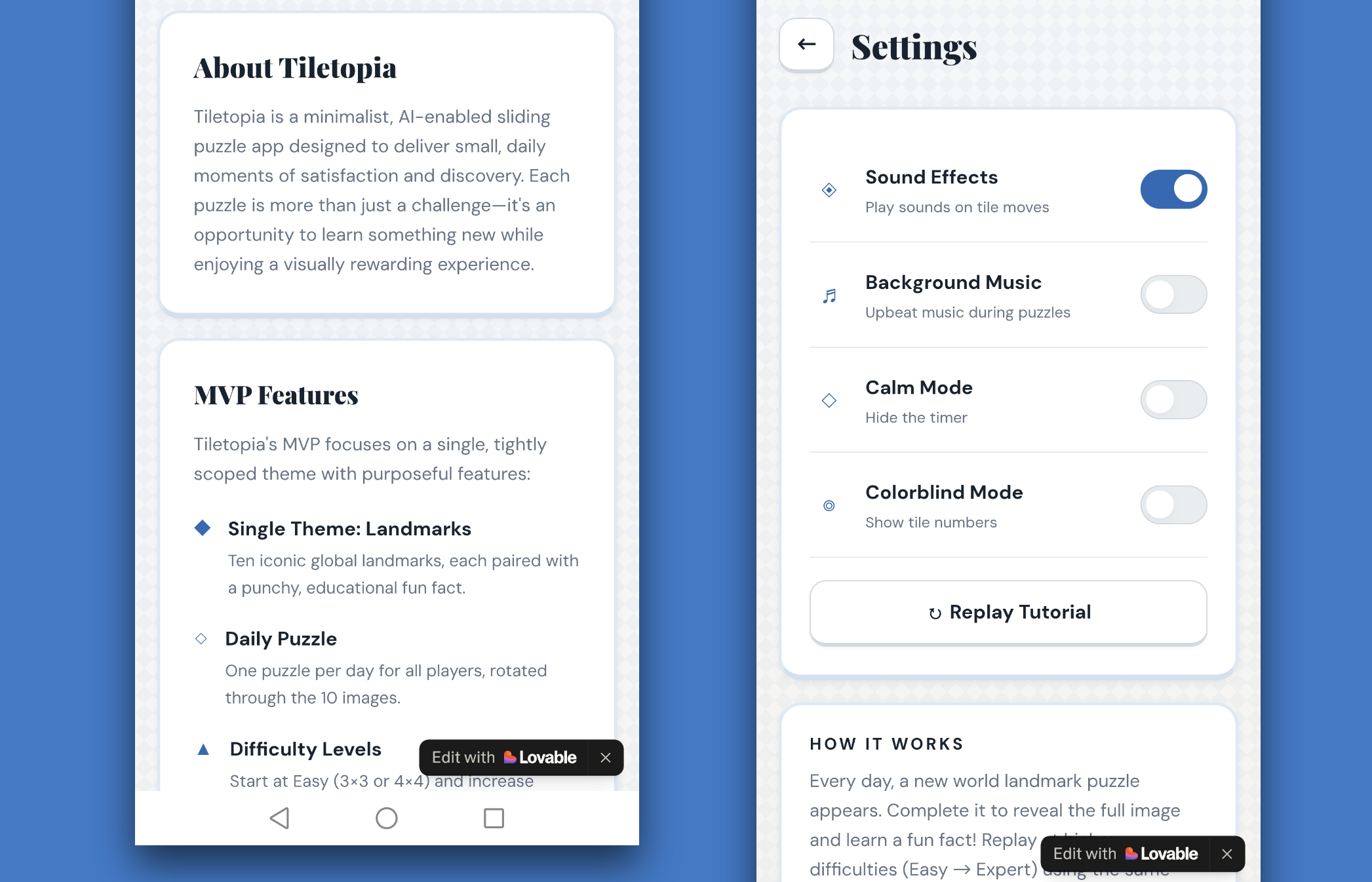

I started by framing the problem: create a playable MVP in a single theme (10 images + 10 fun facts) with optional daily puzzles, difficulty progression, and replay mechanics. From there, I leaned on ChatGPT as a co-brainstorming partner:

• Scoping & differentiation: I asked it how to create an MVP “trivial enough to build fast, but rich enough to demonstrate AI as a co-pilot.”

• Structuring the experience: Together, we mapped daily puzzle rotation, difficulty labelling, and fun-fact reveals.

• Iterating through constraints: I pushed it to propose multi-theme and complex rotations, then deliberately rejected ideas that would overcomplicate the MVP or risk cognitive overload.

For assets, I initially generated AI images with Freepik, then iterated on image selection as ChatGPT suggested better fits for clarity, contrast, and puzzle readability. This highlighted where AI could accelerate output, and where human judgment remained essential.

I treated this not as an exercise in UI craftsmanship but as an experiment in measurable experience outcomes, testing two questions: Does the daily loop work? Can users engage and replay?

This focus on outcomes over output reflects the shift from design as ‘magic’ to design as an enjoyable, measurable experience.

What AI decided vs. what I controlled:

• AI suggested rotation logic, difficulty schedules, individual game personalization, and puzzle presentation.

• I controlled scope, theme, asset selection, and the final MVP decisions, particularly around user comprehension, habit formation, and visual clarity.

Where AI accelerated thinking:

• Brainstorming daily puzzle mechanics and UX flows.

• Generating fun-fact text for multiple iterations quickly.

• Prototyping multiple difficulty schemes for review in minutes rather than hours.

Where AI hallucinated or created UX risk:

• Proposed 7×7/8×8 grids — too cognitively demanding for habit formation.

• Suggested overly basic UI elements (predictable emojis) that weren’t interesting enough to drive emotional engagement.

• Proposed multi-theme rotations (5+ themes) that would dilute MVP focus.

• Generated images and facts that required careful vetting for accuracy and clarity.

“AI isn’t inherently trustworthy — we still have to consider design for trustworthy interactions, using transparency, human curation, and predictable patterns that reinforce confidence.”

By pushing AI to propose and exercising judgment to prune, I treated it as a creative co-pilot, not a replacement. Every hallucination became a prompt to reflect on overarching design principles and user experience.

This 48-hour experiment reframed how I think about design in AI-first organizations and AI-enabled projects:

Team structure:

Senior and lead designers become judgment curators; AI handles volume, iteration, and low-risk ideation. This is why I think design teams will eventually need smaller pods with AI leverage, not larger teams.

Review processes:

Review cycles shift from pixel-level inspection to oversight of the entire creative journey, including AI outputs, prompts, and validation of assumptions and risk. This experiment convinced me that design directors need to review prompts and systems, not just screens.

Design quality bars:

Principles and constraints must be explicit to prevent AI from producing work that is fast but misaligned. This helps senior and lead designers guide AI outputs toward experiences that are delightful and understandable, not just “good enough.”

Hiring expectations:

All designers must either excel at, or demonstrate strong potential in, human-centered design and orchestrating AI as a co-pilot: guiding and challenging outputs rather than executing every detail.

AI & Trust:

I believe all designers will have a responsibility to guard the experience and trustworthy narrative of a product, experience, or service, even when AI accelerates execution. This is key as deepfakes, AI realism, and data and ethics concerns make people skeptical of AI solutions.

One tension this experiment surfaced is the legitimacy of AI-driven creativity. AI is trained on past data, past patterns, and past power structures, which makes it excellent at remixing what already exists but poor at originating what doesn’t. Left unchecked, it subtly pulls design toward the familiar and the safe.

While that may not inherently be a bad thing, it becomes dangerous if teams mistake speed for originality. This reinforces my belief that genuinely forward-thinking innovation cannot be delegated to AI. It will have to be actively protected by designers and leaders who are willing to challenge outputs, challenge the status quo, and drive hard to inject new perspectives, pushing teams beyond what AI tools confidently pump out. This is the only way to prevent teams from sleepwalking into mediocrity at scale.

If I were leading a design team, here are some things I’d do based on this experiment:

• I’d institutionalize AI as a co-pilot while preserving judgment & creativity checkpoints.

• I’d stop (or significantly reduce) doing low-level design work manually, focusing instead on strategy, principles, and orchestration.

• I’d protect clarity of scope (guarding against scope creep), simplicity in MVPs, and user-centered decision-making at all costs.

• I’d reinforce that AI cannot replace human insight, creativity, inspiration, or judgment, but can amplify human decision-making at scale.

• I’d encourage teams to think beyond screens, considering AI across connected, multimodal, context-aware experiences, where autonomous vehicles, robotics, wearables, IoT, and smart environments are already pioneering the way.

• I’d start small, using an experiment like this one to introduce AI to a design team. Pick a constrained problem and a short timeframe. Let AI generate ideas, iterate, and suggest paths. Make sure human judgment decides scope, quality, and alignment. Then use every hallucination as a learning opportunity to refine guidelines, guardrails, and principles.

While AI can accelerate creative options and iteration, it also accelerates risk. Judgment is (will be) the true differentiator.

This trivial experiment gave me deeper insights into future design processes and practice with AI-enabled mindsets. These are likely to change traditional team structures, sizes, and specialisms, especially as the role of an individual contributor becomes increasingly curatorial, not just creative. A designer’s career success will also rely more heavily on framing problems correctly, in addition to prompting, guiding, and challenging AI outputs to align with user and business needs.

Bottom line:

This wasn’t about building a sliding puzzle. It was about developing my own mindset, proving a process, and gaining a perspective on how I would lead design teams and use AI responsibly in an AI-first world.

The beta study is still ongoing (so far, 5/20 users over 7 days). Feel free to become a tester.